Making-of: depthcope

A making-of memo for this video.

Here’s the source code:

…and below is a quick technical note.

Summary

This video was originally made for the Japanese TV show “TECHNE – The Visual Workshop”. Every episode introduces one visual technique, such as stop-motion, then challenges creators to produce a short video using that technique. I was invited to appear on the program as a video artist, and my theme for this episode was “rotoscope”. I had been thinking about how to extend the notion of rotoscoping, and finally came up with this “3D rotoscope” technique.

In short, I first made a 3D previs for rotoscoping in Cinema 4D. It includes a face 3D-scanned with Kinect and geometric forms, which are somewhat unusual motifs for clay animation. Then I rendered them as a “depth map”, which represents the distance from a reference surface, like a topographical terrain map.

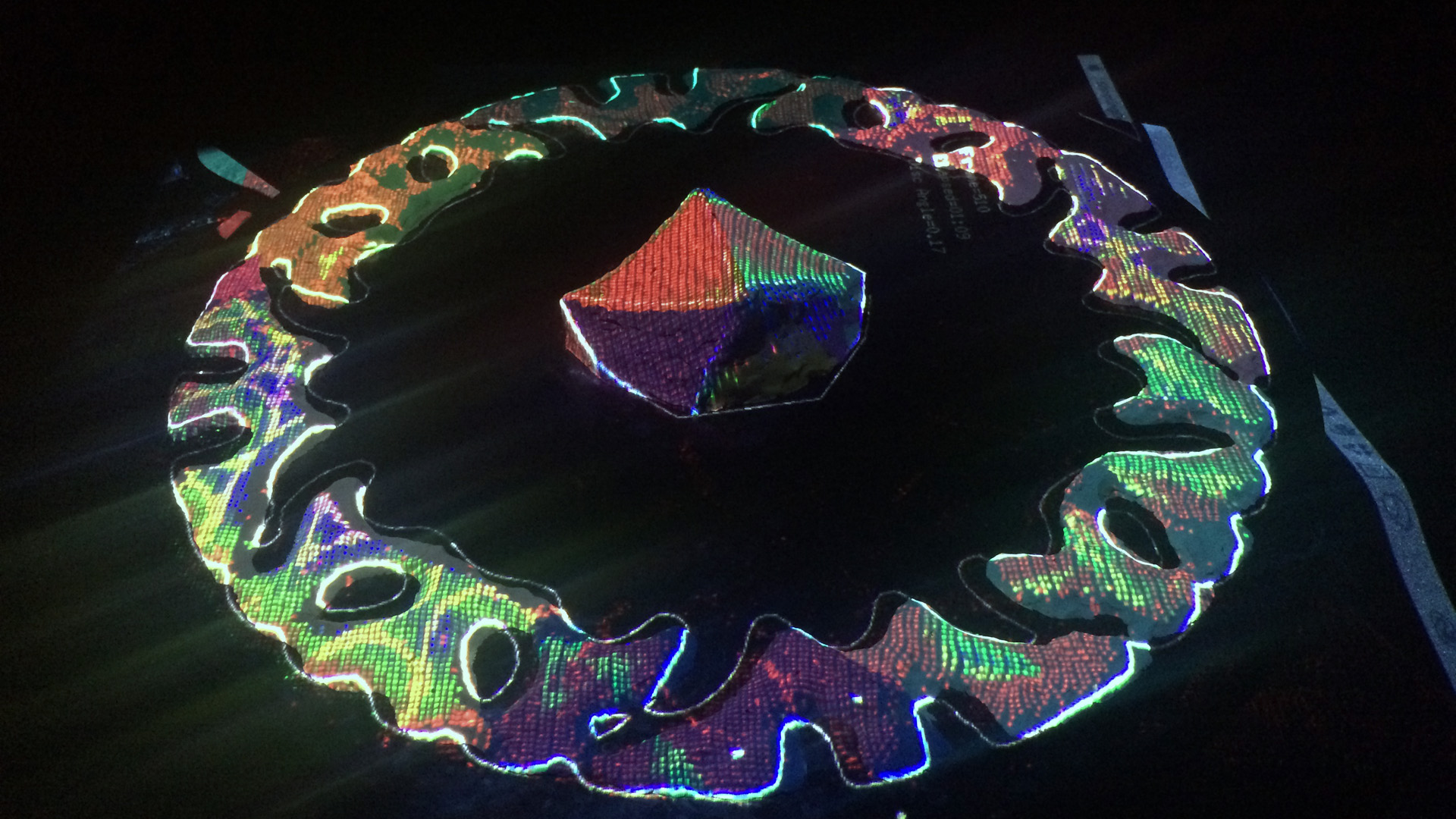

Next, I mounted a Kinect and a projector directly above a rotating black table. I made an app that uses colored light from the projector to show whether each point on the clay surface is higher or lower than the depth map. Parts of the clay that are too high turn red, and parts that are too low turn blue. A part turns green when it is at nearly the right height. So when the whole surface turns green, the form of the clay roughly matches the previs.

In other words, we can trace the 3D previs in clay by repeating this more than 500 times. Generally speaking, clay animation tends to look handmade and analog. With this workflow, however, I thought the clay would move and metamorphose as precisely as CG. I also wanted to combine a highly automated system with such an old-style, laborious technique.
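The color feedback described above can be sketched in a few lines. This is a minimal illustration in Python, not the actual openFrameworks app: the function names, the millimeter units, and the 2 mm tolerance are all assumptions made for the example, and heights are assumed to be measured from the table surface.

```python
def classify_height(measured_mm, target_mm, tolerance_mm=2.0):
    """Return the projector color for one point on the clay surface.

    tolerance_mm is a hypothetical threshold; the text only says
    "nearly the right height".
    """
    diff = measured_mm - target_mm
    if diff > tolerance_mm:
        return "red"    # clay is too high here: remove material
    if diff < -tolerance_mm:
        return "blue"   # clay is too low here: add material
    return "green"      # close enough to the depth map


def classify_surface(measured, target, tolerance_mm=2.0):
    """Classify every point of two equally sized 2D height grids."""
    return [
        [classify_height(m, t, tolerance_mm) for m, t in zip(m_row, t_row)]
        for m_row, t_row in zip(measured, target)
    ]
```

A frame would be ready to shoot once `classify_surface` returns only `"green"` for the area covered by the clay.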

System

I used Dragonframe and an EOS 7D for shooting the stop-motion, and openFrameworks for creating the shooting app. I adopted a Kinect V2, which partially works on Mac.

Integration of Dragonframe and openFrameworks

Dragonframe can only send events by calling a shell script with parameters, so I wrote a script in Node.js that forwards those parameters as OSC messages.
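To show what such a bridge involves, here is a minimal sketch in Python (the original script used Node.js). It packs whatever arguments the shell script received into a single OSC message of string arguments and sends it over UDP. The OSC address `/dragonframe/event`, the host, and port 9000 are hypothetical values chosen for the example, not taken from the project.

```python
import socket


def osc_string(text):
    """Encode an OSC string: ASCII bytes, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, args):
    """Build an OSC message whose arguments are all strings."""
    type_tags = "," + "s" * len(args)
    body = b"".join(osc_string(a) for a in args)
    return osc_string(address) + osc_string(type_tags) + body


def send_event(args, host="127.0.0.1", port=9000, address="/dragonframe/event"):
    """Send the arguments as one OSC message over UDP.

    host, port, and address are hypothetical defaults.
    """
    packet = osc_message(address, [str(a) for a in args])
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet
```

A wrapper script invoked by Dragonframe could then call `send_event(sys.argv[1:])`, leaving the receiving app to interpret the parameters.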

Kinect-Projector Calibration

I used ofxKinectProjectorToolkitV2 to transform 3D Kinect coordinates into 2D projector coordinates. I calibrated with this sample in the addon. It exports calibration.xml, which contains some coefficients.

The transformation that uses these coefficients appears to be implemented in ofxKinectProjectorToolkitV2::getProjectedPoint(), so I referenced it and rewrote it as a shader program to make it more efficient.
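For readers unfamiliar with this kind of calibration, the sketch below shows one common form such a mapping takes: a projective transform with 11 coefficients that maps a 3D point to normalized 2D projector coordinates. Both the coefficient count and the layout used here are assumptions for illustration; check the addon's source for the form it actually uses. The function name `project_point` is hypothetical.

```python
def project_point(world_point, coeffs):
    """Map a 3D Kinect-space point to 2D projector coordinates.

    Assumes 11 coefficients laid out as three rows of a projective
    transform, with the last element of the third row fixed to 1.
    """
    if len(coeffs) != 11:
        raise ValueError("expected 11 calibration coefficients")
    wx, wy, wz = world_point
    a = coeffs[0] * wx + coeffs[1] * wy + coeffs[2] * wz + coeffs[3]
    b = coeffs[4] * wx + coeffs[5] * wy + coeffs[6] * wz + coeffs[7]
    c = coeffs[8] * wx + coeffs[9] * wy + coeffs[10] * wz + 1.0
    # The perspective divide turns homogeneous values into 2D coordinates.
    return (a / c, b / c)
```

Because every pixel is computed independently with the same few multiply-adds, this arithmetic ports naturally to a fragment shader, which is where the efficiency gain comes from.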

Converting to the Disc Coordinate

I realized I needed to convert between the disc coordinate system (whose origin is at the center of the disc) and the Kinect coordinate system (whose origin is at the IR camera), so I implemented a way to set an X-axis and a Y-axis on the disc, as in the GIF below:
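One way to build such a conversion is sketched below in Python, under the assumption that three points are picked in Kinect space: the disc center, a point along the desired X-axis, and a point roughly along the desired Y-axis. The function names are hypothetical and this is not the project's actual implementation. The Y direction is orthogonalized against X (Gram-Schmidt), and Z is their cross product.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)


def make_disc_frame(origin, x_point, y_point):
    """Build an orthonormal disc frame from three picked Kinect-space points."""
    x_axis = normalize(sub(x_point, origin))
    y_raw = sub(y_point, origin)
    along_x = dot(y_raw, x_axis)
    # Remove the part of the Y direction that lies along X.
    y_axis = normalize(tuple(y - along_x * x for y, x in zip(y_raw, x_axis)))
    z_axis = cross(x_axis, y_axis)
    return origin, (x_axis, y_axis, z_axis)


def kinect_to_disc(point, frame):
    """Express a Kinect-space point in disc coordinates."""
    origin, axes = frame
    rel = sub(point, origin)
    return tuple(dot(rel, axis) for axis in axes)


def disc_to_kinect(point, frame):
    """Inverse of kinect_to_disc."""
    origin, axes = frame
    return tuple(
        origin[i] + sum(point[k] * axes[k][i] for k in range(3))
        for i in range(3)
    )
```

With a frame like this, rotating the table corresponds to a rotation about the disc's Z-axis, which keeps the depth-map comparison independent of where the Kinect is mounted.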

Shooting

It was so tiring😭

Others

I made the mount for the projector and Kinect. I modeled it in Fusion 360 and cut the parts from MDF with my CNC milling machine. Honestly, it was the most exciting part of the whole process lol

I had also planned to control the rotating table, but that failed because the stepper motor did not have enough torque 😨 I have to study mechanics more.

Incidentally, I wanted to mix iron sand and glitter into the clay to give it a more varied texture. The picture below is from that experiment.

I also found that the depth recognition becomes unstable when someone stands very close to the Kinect. To measure the height precisely, we had to keep standing in the same position. In the end, we also relied on guides and contours rendered in Cinema 4D. I’d like to find a smarter way.

Although there are many things to reflect on, it was very interesting to make such an experimental work with an experimental workflow.

I think the Kinect community should be more active. Until a few years ago there were many digital artworks using Kinect with a “Minority Report”-like look, and that style has since gone out of date. Many creative coders are now working in VR or deep learning. However, I think there must be many undiscovered ways to use a depth camera, whether or not its depth image is visualized directly. So I’d like to keep digging into it.

Anyway, I really don’t want to touch clay anymore. At least not for 10 years…